How does everyday use of AI as a support tool complicate the idea that AI will replace human thinking and effort?

UCL - BA MEDIA - 25/26

This website presents a digital ethnography that explores the everyday use of artificial intelligence. Mainstream public debate tends to frame AI as a harmful technology that could eventually replace human reflection, thought and effort in many fields. This project aims to analyse and engage with these common misconceptions by examining how AI is actually used as a tool in studies and at work, but also in more private, personal contexts.

Using autoethnographic methods and digital materials such as screenshots and field notes, this project shows that AI is rarely used as a replacement for work or human reflection, contrary to common assumptions. Rather, AI is used as a support tool, and its responses are interpreted, corrected or even rejected by the user depending on their quality. By drawing on real-life scenarios and lived experience instead of abstract or speculative predictions, this project highlights how human judgment and agency remain central to the use of artificial intelligence.

This project analyses how a student might use AI day to day, as academic support and/or as a guide in personal situations outside of work. Instead of focusing on speculative interpretations of AI, it looks at the use of artificial intelligence in concrete contexts such as higher education, moments of doubt, time pressure and emotional stress.

AI is understood here not as an autonomous entity but as a tool that we interact with, evaluate, analyse and also constrain. The focus is on when AI is used, what we trust it with and where we set limits.

This project uses autoethnography as its primary research method, analysing the researcher's own experience with AI to understand the fundamental aspects of its use.

As this is an autoethnographic project, I am the sole participant, and none of the material used contains personal or sensitive information.

Data was gathered through personal notes taken while using AI, screenshots of prompts and AI responses, and an analysis of whether each response was accepted, modified or rejected.

This project is an immersion into the daily use of AI in the life of a university student, focusing on everyday encounters with AI technologies to explore how human judgment and effort remain essential in practice.

The common idea this project challenges is the claim that "AI will replace us". This narrative often translates into the belief that the rapid evolution and growing autonomy of AI will lead to the disappearance of human labour, reflection and critical thinking. However, ethnographic perspectives counter this argument by showing that technology does not act on its own but is shaped by how people use it in everyday life and within social and institutional contexts.

By examining how AI is used as a support tool rather than an autonomous replacement, this project illustrates that human effort and thinking are not erased or replaced but redistributed. Users remain responsible for interpretation, fact-checking, accuracy, ethical judgment and creative decision-making. This project brings this invisible type of labour to light.

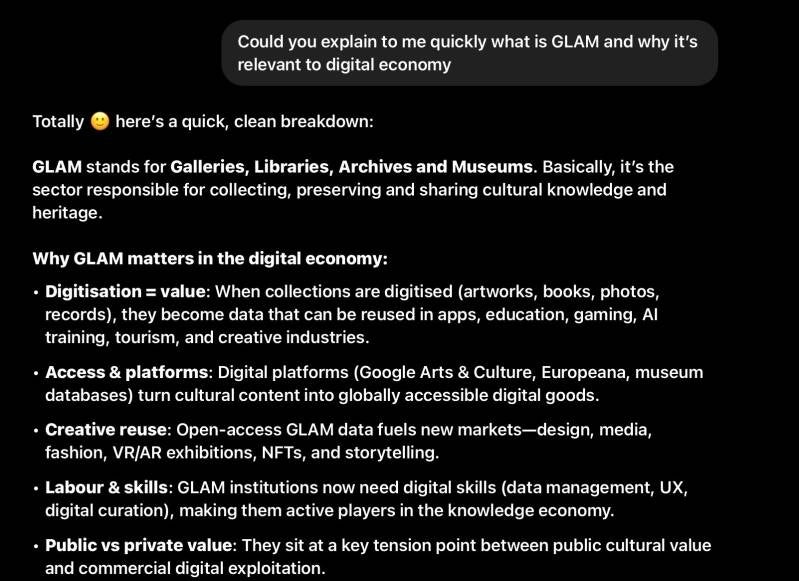

This screenshot shows a moment where I used AI to help clarify academic content efficiently. The response was helpful, although I still needed to take some time to make sure I understood everything correctly. It simplified the content, but additional research was necessary to grasp all the concepts. This shows how AI can be used as a tool to support reflection rather than replace it.

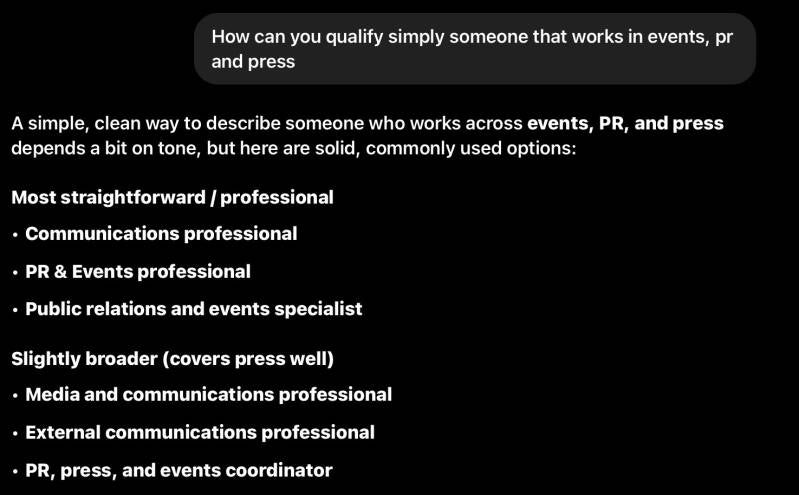

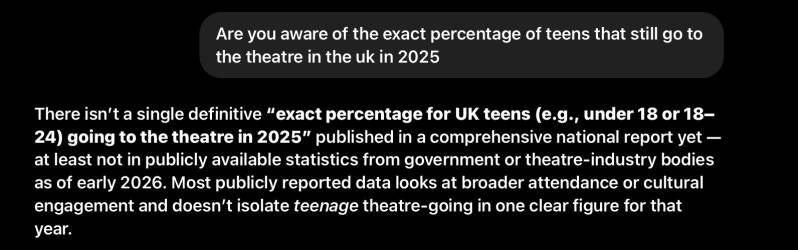

This screenshot shows a moment where the AI provided an answer that seemed clear at first, although I quickly realised that it lacked precision and was too vague. Instead of simply accepting the first output, I narrowed down my questions. I wanted to make sure the answer was accurate, so these extra steps were necessary. This shows the role we play in evaluating the answers provided by artificial intelligence.

This screenshot shows a moment where I rewrote an AI-generated response in my own words so that I could use it as personal revision material. It is often necessary to correct and adapt the content we obtain from AI to make it truly useful. (This relates to the subject of the first screenshot.)

This screenshot shows a moment where I chose not to use an AI-generated response because I was uncertain about it and worried it might be unreliable. It is also important to keep ethical concerns in mind when using these tools, especially in academic contexts where plagiarism is severely penalised. This shows again that AI does not operate independently but depends on human judgment.

The ethnographic materials suggest that AI does not operate independently from human thinking. While AI is often used to save time and clarify information efficiently, its outputs are very rarely trusted without some form of correction or verification.

The fear of dependence, rather than the fear of replacement, emerges as a central and deep-rooted concern. Users set boundaries when it comes to creativity and authorship, which shows that AI is treated as a tool rather than a complete substitute for human creation and responsibility.

Instead of confirming the belief that AI will replace human thinking and effort, this project shows how AI reshapes where and how effort is invested. Human labour becomes less visible, but it remains essential for decision-making, ethical judgment and ensuring that we control the technology more than it controls us. By focusing on everyday practice, this ethnography reveals why simplistic narratives about AI replacing humans fail to capture the actual complexity of daily AI use.
